Offline Translation is an iOS app that translates text from photos without using cloud services. Users, especially travelers or people in low-connectivity areas, take or upload a picture; the app then detects the source language automatically and returns a translation in the user's chosen language.

The app is ideal for travelers who need to translate road signs, menus, or business signs in areas with poor or no internet connectivity.
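The first two stages of the flow described above (reading text from a photo, then detecting its language) can be sketched with Apple's on-device frameworks. This is a minimal illustration, not the app's actual code: the function names `recognizeText(in:)` and `detectLanguage(of:)` are hypothetical, the sketch assumes an iOS 15 or later SDK (where `VNRecognizeTextRequest.results` is typed), and the translation step is left out because it depends on which of the local models or frameworks is being evaluated.

```swift
import Foundation
import NaturalLanguage
import Vision

/// Hypothetical helper: runs on-device OCR over an image with Vision
/// and returns one string per recognized line of text.
func recognizeText(in image: CGImage) throws -> [String] {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate      // favor accuracy over speed
    request.usesLanguageCorrection = true

    // perform(_:) runs synchronously; results are available on return.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let observations = request.results ?? []
    // Keep only the most confident candidate for each text region.
    return observations.compactMap { $0.topCandidates(1).first?.string }
}

/// Hypothetical helper: guesses the dominant language of the recognized
/// text with the Natural Language framework. Returns nil if undetermined.
func detectLanguage(of text: String) -> NLLanguage? {
    let recognizer = NLLanguageRecognizer()
    recognizer.processString(text)
    return recognizer.dominantLanguage
}
```

A caller would typically join the recognized lines into one string, pass it to `detectLanguage(of:)`, and hand both the text and the detected language to whichever offline translation backend is under test. Very short inputs such as a single word on a sign may not give the recognizer enough signal, so the result is optional.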

Apple and Google both offer offline translation apps. These rely on downloading "language kits" for each supported language pair. Offline Translation evaluates whether performance can be improved with alternative local models or architectures.

1/5 – This app is not commercially competitive with Apple/Google offerings and is primarily built for technical exploration.

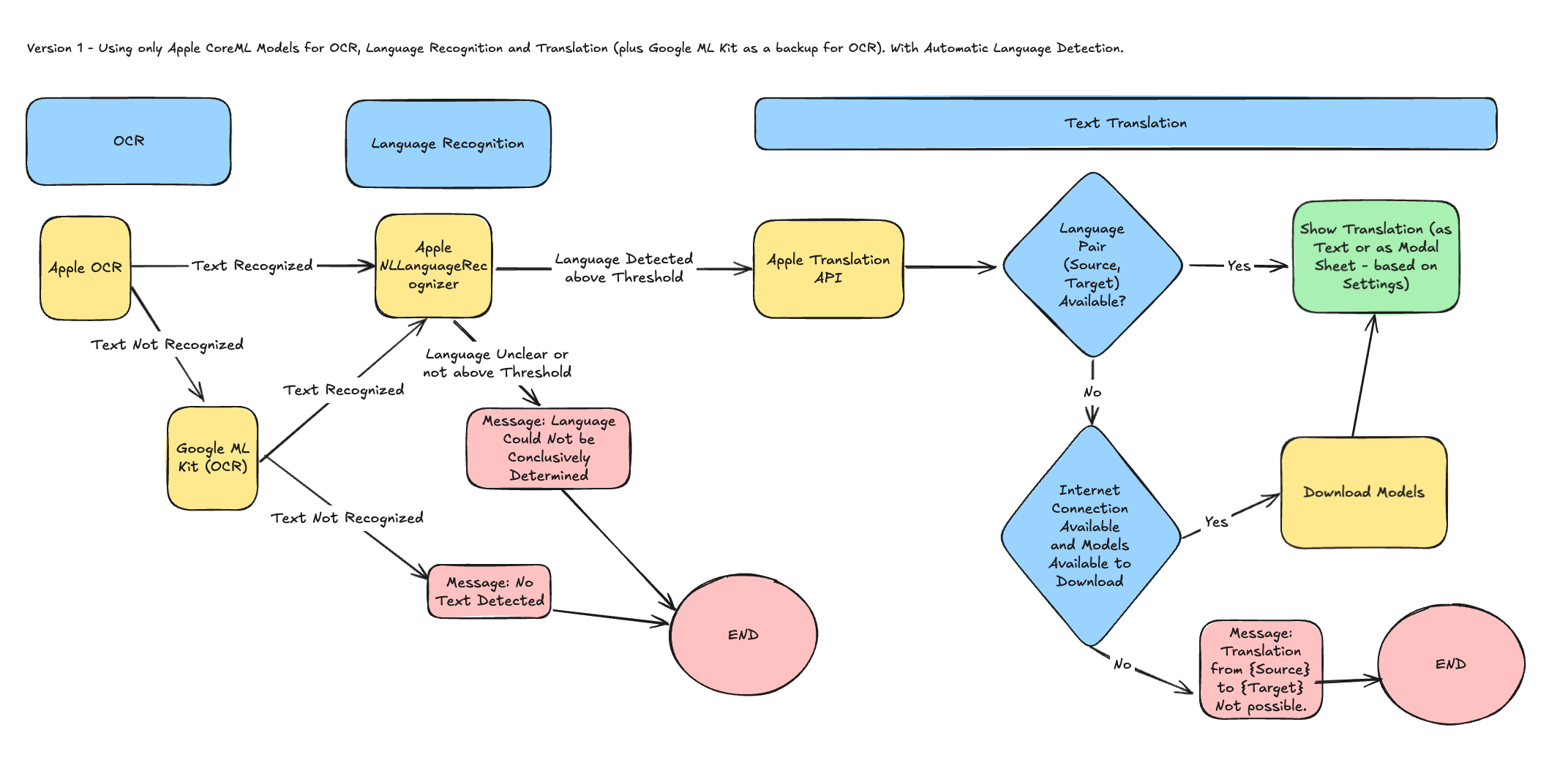

CoreML, Vision, Language, and Translation frameworks

The app will be developed in three versions with the same UI:

(In progress)